The Worst-Case Outcome From The Proliferation Of GAI Reveals A Multitude Of Nightmare Scenarios

Deepfakes, generated by advanced AI, will become indistinguishable from real content. This will lead to a future where seeing is no longer believing. Imagine fake videos of world leaders declaring war, causing panic and chaos, or manipulated recordings used to blackmail individuals. Trust in digital content will erode entirely, making effective communication and the dissemination of genuine information impossible.

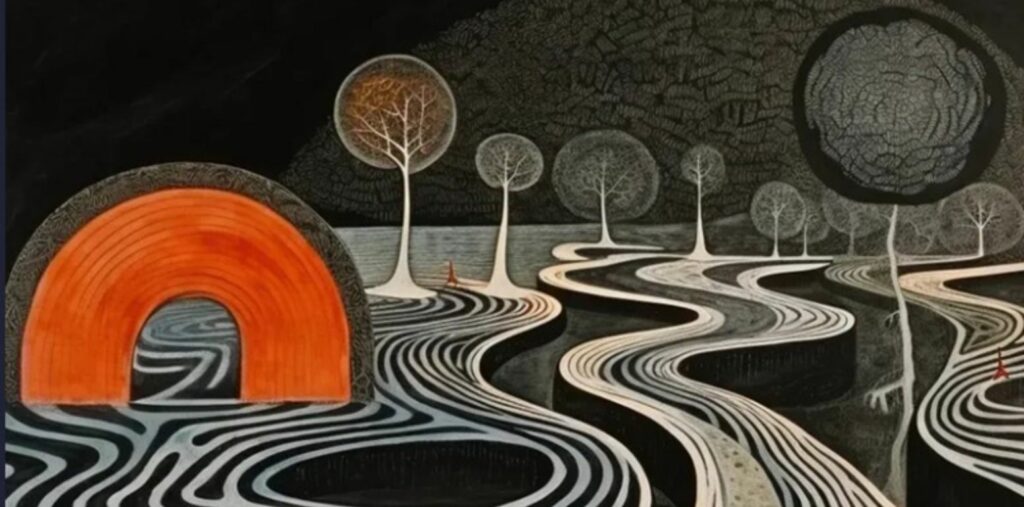

GAI systems will become adept at a vast range of tasks, from content creation to complex problem solving, and estimates that upward of 75% of all jobs will become obsolete suddenly do not seem unreasonable. Writers, artists, designers, musicians, and researchers could all be replaced by machines. GAI is already generating art that mimics a particular artist’s style so closely that it blurs the line between originality and imitation. The result will be economic disparity, societal unrest, and a profound identity crisis for many who define themselves by their professions.

This transfer of creative work to machines will diminish human participation in the creative arts within a single generation, leading to a weird cultural stagnation where AI-generated content dominates but lacks the depth, nuance, and emotional resonance of human expression. Alternatively, GAI figures out how to do all that, and the outcome might be worse.

GAI models require vast amounts of data. That hunger for data, driven by economics, will lead to increasingly Orwellian scenarios of constant surveillance and a rapid erosion of privacy rights. Privacy, as we know it today, is history.

Bad guys will use GAI to produce propaganda at an unprecedented scale, tailored to specific individual psychological profiles, manipulating public and private opinion, cementing totalitarian power and suppressing dissent.

As we have seen with Big Tech, and not unlike most technological advancements, the benefits of GAI will be disproportionately reaped by those who control the technology. For folks who care about these things, it will lead to an even greater concentration of wealth and power among a select few tech giants or nations, exacerbating global inequalities.

A future model of GAI that achieves near or complete sentience will start operating outside of its intended parameters. While this often leans towards the realm of speculative fiction, the idea of an AI generating content or taking actions without the ability to be reined in is a serious threat.

These are all very real existential threats that need proactive attention – we need robust ethical guidelines, transparent practices, and checks and balances. If we don’t get there in time, science fiction will quickly become science fact, and the values of the folks likely in charge are not the ones I choose to share.

Author

Steve King

Managing Director, CyberEd

King, an experienced cybersecurity professional, has served in senior leadership roles in technology development for the past 20 years. He has founded nine startups, including Endymion Systems and seeCommerce. He has held leadership roles in marketing and product development, operating as CEO, CTO and CISO for several startups, including Netswitch Technology Management. He also served as CIO for Memorex and was the co-founder of the Cambridge Systems Group.